- Twitter: You can now also find us on Twitter for short but more frequent updates. http://twitter.com/PenemuNXT

- Refreshed design: For the YouTube page (http://www.youtube.com/PenemuNXT) and this blog (http://penemunxt.blogspot.com/).

- New short description: "A SLAM (Simultaneous Localization and Mapping) implementation using LEGO Mindstorms NXT and leJOS (Java)."

- Reorganized Google Code Page: More info at http://code.google.com/p/penemunxt/.

- Map orientation: Rotate the map. The plan is to rotate the map automatically using data from the compass sensor, but we have not implemented that yet, so for now you have to rotate it manually.

- New map processors:

- Visualize clear area: Areas where there are probably no objects are now displayed in white.

- Background grid: A grid of squares that each represent 10x10 cm, so that it is easier to estimate lengths.

- Performance optimization

- Export rendered map: You can export a rendered map as PNG or JPEG to share with your friends ;)

2009-12-14

Updates

Some updates

Labels:

clear area,

design,

grid,

orientation,

render map,

twitter

2009-12-09

Map Preview

I have moved the visualization of the map into a separate control.

Because of this it's really easy to reuse it in different ways.

So far I've reused it in three places.

Map processors preview

Map timeline preview

If you hover the mouse over the timeline you will see a thumbnail with a preview of the map at that frame.

Map preview

In my opinion this is the coolest one. When you are about to open a map from a file, you will see a preview of it as you select one.

The next thing to do is to move the timeline into its own control so that it is as easy to reuse as the map.

2009-12-06

Map processors

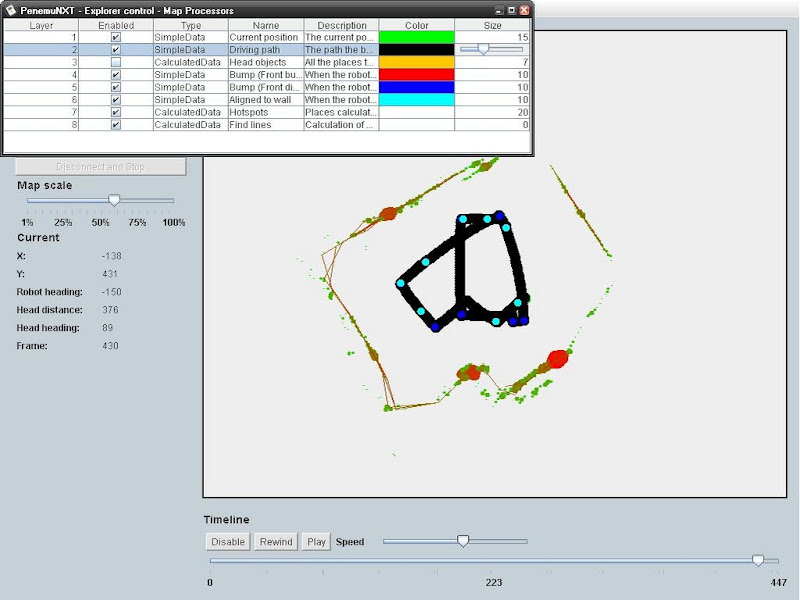

The big news of today is map processors!!

They are a new way of implementing how the map is painted and processed.

The idea is that it should be easy to add new ways of improving the map.

What I did was set up the interface IMapProcessor. Basically it has some properties for color, name and description, and a method to process the data.

You can find the processors we have at the moment here.

They all implement the IMapProcessor interface.

There are two main types of processors, SimpleData and CalculatedData.

* SimpleData processors basically just paint dots, for example where the robot has driven. They don't improve the data in any way.

* CalculatedData processors combine different data to calculate new information, for example where walls and objects are.
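As a rough illustration of the idea (not the project's actual code), an interface of this kind could look something like the sketch below. The names `MapProcessorSketch`, `Reading`, `PositionDots` and `ProcessorChain` are made up for the example, the real IMapProcessor may well differ, and the processing step here only collects points instead of painting them.

```java
import java.awt.Color;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for one recorded robot position (in cm).
class Reading {
    final double x, y;
    Reading(double x, double y) { this.x = x; this.y = y; }
}

// Assumed shape of a map processor: some metadata plus one processing method.
interface MapProcessorSketch {
    String getName();
    String getDescription();
    Color getColor();
    boolean isEnabled();
    // Returns the points (x, y) this processor wants drawn for the given data.
    List<double[]> process(List<Reading> data);
}

// A "SimpleData"-style processor: one dot per recorded position, no clean-up.
class PositionDots implements MapProcessorSketch {
    private final Color color;
    private final boolean enabled;

    PositionDots(Color color, boolean enabled) {
        this.color = color;
        this.enabled = enabled;
    }

    public String getName() { return "Positions"; }
    public String getDescription() { return "Dots where the robot has been"; }
    public Color getColor() { return color; }
    public boolean isEnabled() { return enabled; }

    public List<double[]> process(List<Reading> data) {
        List<double[]> dots = new ArrayList<>();
        for (Reading r : data) {
            dots.add(new double[] { r.x, r.y });
        }
        return dots;
    }
}

// Runs every enabled processor in order, as a MapProcessors-like container might.
class ProcessorChain {
    private final List<MapProcessorSketch> processors = new ArrayList<>();

    void add(MapProcessorSketch processor) { processors.add(processor); }

    int countPoints(List<Reading> data) {
        int total = 0;
        for (MapProcessorSketch p : processors) {
            if (p.isEnabled()) {
                total += p.process(data).size();
            }
        }
        return total;
    }
}
```

A CalculatedData-style processor would implement the same interface but derive new points (for example estimated wall segments) from several readings instead of echoing them.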

You add all the processors you want to an instance of MapProcessors and call it every time you want to paint the map.

This is how it might look when you configure it:

    // Create the processors that should be active by default
    ArrayList defaultProcessors = new ArrayList();
    mapProcessorCurrentPos = new MapCurrentPos(Color.GREEN, 10, true);
    mapProcessorHotspots = new MapHotspots(15, false);
    mapProcessorFindLines = new MapFindLines(true);

    // Register them in the order they should be applied
    defaultProcessors.add(mapProcessorCurrentPos);
    defaultProcessors.add(mapProcessorHotspots);
    defaultProcessors.add(mapProcessorFindLines);

    mapProcessors = new MapProcessors(defaultProcessors);

This is the new admin view for them.

You can easily change size..:

..and colors:

I also made a change so that when you select a row in the dataview the timeline will jump to that frame (and if you drag/play the timeline, the dataview will jump to that frame):

Hopefully we will release a compiled version of the apps soon so you can easily download them and try them yourself!

/Peter F

Labels:

IMapProcessors,

map processing

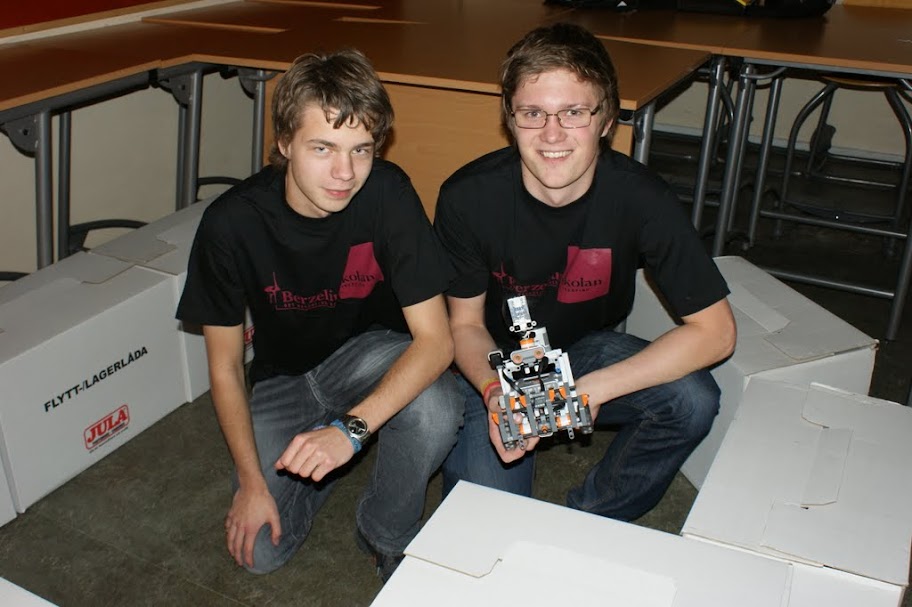

Show at school

We showed the robot and what it can do at our school.

I think most people liked it though some might have been confused by all the dots :)

Here are some pictures from that day.

2009-11-12

Latest improvements

We've made some improvements to the project. I'll list them below:

- Renaming of the projects
  - PenemuNXTExplorer
  - PenemuNXTExplorerClient
  - PenemuNXTExplorerServer
  - PenemuNXTFramework
  - PenemuNXTFrameworkClient
  - PenemuNXTFrameworkServer
- Rearranged UI
  - New colors, logo, menu, margins...
- Timeline
  - We can now "play" saved data and go to specific positions in it
- Save and open
  - Save and open maps as files (*.penemunxtmap and *.penemunxtmapxml)
- Filters
  - A function to filter out irrelevant data and draw lines between the correct data
- View raw data
  - A table shows the raw data (sensor values from the robot) we base the map on
- Hotspots
  - Shows places on the map where we have accurate data with big red circles, less accurate with small green circles

/Peter F

Labels:

hotspots,

improvments,

open,

save,

UI

2009-11-10

Improvements and show at the school

We've made loads of improvements in the last few days. The reason is that tomorrow we will show the robot live @ our school for the first time. It's only a demo of the prototype.

Screenshots

I will write a more detailed description of the improvements tomorrow.

If you want to see us demo the robot you should be @ Berzeliusskolan tomorrow (11/11) @ 18.00.

/Peter F

Labels:

berzeliusskolan,

prottype,

show

2009-11-05

Save and Open map data

I've now implemented save and open functionality. This enables us to save the raw data (positions, headings, distances etc.) as an XML file that we can later open and import into the application.

This is useful in many ways. We can now work on the server functionality even when we don't have the NXT with us (for example when we are in school). We just use saved data and let the server app process it instead of data received over Bluetooth. For now all the data is imported at once, but I will implement an emulation mode that adds the saved data with the same time delays as when it was recorded. This will enable us to play back what happened. I think I will implement this by adding one more connection type: instead of choosing only between USB and Bluetooth you will also be given the choice File, and instead of passing the name of the NXT you pass the path to the file containing the raw values.
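The planned playback with the original time delays could be sketched roughly like this. It is only an illustration under assumptions: `RecordedFrame`, `FileReplay` and the millisecond timestamp field are hypothetical names, and the saved XML format is not modeled at all.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical saved frame: when it was recorded (ms) plus its raw values.
class RecordedFrame {
    final long timestampMs;
    final String rawValues;

    RecordedFrame(long timestampMs, String rawValues) {
        this.timestampMs = timestampMs;
        this.rawValues = rawValues;
    }
}

class FileReplay {
    // How long to wait before each frame so playback keeps the recorded pace.
    static List<Long> delaysMs(List<RecordedFrame> frames) {
        List<Long> delays = new ArrayList<>();
        long previous = frames.isEmpty() ? 0L : frames.get(0).timestampMs;
        for (RecordedFrame frame : frames) {
            // Guard against out-of-order timestamps by never waiting a negative time.
            delays.add(Math.max(0L, frame.timestampMs - previous));
            previous = frame.timestampMs;
        }
        return delays;
    }

    // Hands the frames to the consumer one by one, sleeping in between,
    // so the consumer sees them arrive as it would from a live connection.
    static void replay(List<RecordedFrame> frames, Consumer<RecordedFrame> sink)
            throws InterruptedException {
        List<Long> delays = delaysMs(frames);
        for (int i = 0; i < frames.size(); i++) {
            Thread.sleep(delays.get(i));
            sink.accept(frames.get(i));
        }
    }
}
```

Keeping the delay calculation separate from the sleeping loop makes it possible to test the timing logic, or to play back faster, without waiting in real time.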

One of our goals is also to publish the data to a webserver so that a client can connect and see the map being created in real time. The export function is one step towards that.

This is what the new control panel looks like:

Intelligent A.I.

So far we have put all our work into making a prototype that can meet our basic goal, and we have more or less succeeded. However, the AI that controls the robot has so far been pretty stupid, to say the least. Simply put, it has driven in a straight line until it got too close to an obstacle and then turned. Now we have started working on something that can manage a little more than that.

The strategy we've planned for the robot is the following:

- Start by either scanning around or simply driving forward until it approaches an obstacle.

- Follow the outline of that obstacle until the robot reaches a position it has already visited.

- If the first obstacle was an object the robot has driven around, the robot simply drives in another direction and finds a new obstacle. If it was the perimeter of the area (such as the walls of a room), the robot will begin scanning the remaining unexplored area until it's sure it has found all objects.

- Eventually we will hopefully implement something which uses the map it has created, such as pathfinding or covering the area in an efficient way (something that could be of use for e.g. automated vacuum cleaners).

This is still a very rough draft and will most likely be subject to significant changes, but it's a start nonetheless.

Right now we're on the second point: we're working on an algorithm which will follow the curves and turns of a wall or the side of an object. The first iteration will consist of the behavior we already have (turn left when too close to something), a behavior to align the robot parallel to the wall and a behavior to detect when the wall turns away from the robot. If done right this should be enough to work in the vast majority of all cases.
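The three behaviors described above could be arranged by priority roughly as follows. This is only a sketch: it imitates the idea of behavior-based programming without using the leJOS classes, it assumes the wall is kept on the robot's right side, and every name and threshold (`WallFollower`, `TOO_CLOSE_CM` and so on) is invented for the example.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sensor snapshot: distance ahead and distance to the wall on the right, in cm.
class Distances {
    final double frontCm, sideCm;

    Distances(double frontCm, double sideCm) {
        this.frontCm = frontCm;
        this.sideCm = sideCm;
    }
}

// A behavior proposes a command, or returns null when it does not want control.
interface WallBehavior {
    String propose(Distances d);
}

class WallFollower {
    // Assumed thresholds, chosen only for illustration.
    static final double TOO_CLOSE_CM = 20;
    static final double TARGET_SIDE_CM = 25;
    static final double TOLERANCE_CM = 5;
    static final double WALL_LOST_CM = 60;

    // Highest priority first; the last behavior always applies.
    private final List<WallBehavior> byPriority = Arrays.asList(
        // 1. Something straight ahead: turn away from it.
        d -> d.frontCm < TOO_CLOSE_CM ? "TURN_LEFT" : null,
        // 2. The wall has turned away from the robot: follow it around the corner.
        d -> d.sideCm > WALL_LOST_CM ? "TURN_RIGHT" : null,
        // 3. Drifting toward or away from the wall: steer back to the target distance.
        d -> Math.abs(d.sideCm - TARGET_SIDE_CM) > TOLERANCE_CM
                ? (d.sideCm > TARGET_SIDE_CM ? "STEER_RIGHT" : "STEER_LEFT")
                : null,
        // 4. Default: keep driving along the wall.
        d -> "FORWARD"
    );

    // Returns the command of the highest-priority behavior that wants control.
    String decide(Distances d) {
        for (WallBehavior behavior : byPriority) {
            String command = behavior.propose(d);
            if (command != null) {
                return command;
            }
        }
        return "STOP"; // not reached while the default behavior is in the list
    }
}
```

The point of the layout is that a new behavior is just one more entry in the list, and the order of the list is the only place where priorities are decided.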

2009-10-18

Improved UI

Today I improved the UI that controls the robot and that displays the map.

This is how it looks now:

You can zoom in on the map by scrolling the mouse wheel or by dragging the slider on the left panel. If you want to pan the map you just have to drag it.

It works much like Google Maps.

The left panel is also better structured, and you can now easily choose between USB and Bluetooth and change which NXT to connect to.

I also refactored the code so that it looks a little bit more structured :)

/Peter F

2009-10-17

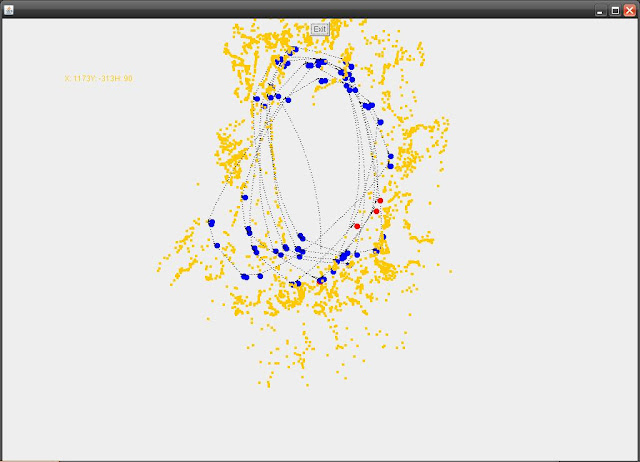

A working prototype!

We are happy to announce that we now have a working prototype. Our robot can scan its surroundings and return data to a server which in turn visualizes it.

This was the first try ever at creating a real map, and the results aren't very impressive. Fortunately we've already managed to improve on it quite a bit, although there is still very much left to be done.

A simple AI moves the robot around so that it can scan. Because of bugs in the current release of leJOS we have been unable to use the compass sensor, and as a consequence the accuracy has suffered. This is something we hope will improve fairly soon.

Also, the AI is so far very simple, but thanks to the behavior-based programming we're using, it will be relatively simple to keep building on what we have until we someday have an algorithm which can tackle most problems with reasonable accuracy.

The bar to the left is a control bar which shows some data from the robot and also has options for what you want to be painted. The labels are pretty self-evident, save for "Bumps", which marks where a behavior has taken over from the default of driving forward in a straight line. Blue indicates that the ultrasonic sensor in front has detected an obstacle and red that the robot has crashed into something with its bumper.

The arena today was a (roughly) 3 by 1.5 meter rectangle, and you can clearly see the shape of the area, although it's far from perfect. Considering how early in the project we are, we're quite satisfied. In fact, we're following our schedule pretty nicely: this is about where we planned to be by the end of next week.

Now we will continue to work mainly on the AI, to give it an intelligent algorithm instead of the almost random one we have now. Naturally we will continue to improve the other aspects as well, so stay tuned!

/Josef and Peter

2009-10-15

New Robot

As promised, we have built a new robot that will probably be the final one, or at least very similar to it. It is based on the Explorer robot from Nxtprograms.

Our requirements for the physical unit were that it has to be able to rotate around its own axis (a requirement for the leJOS navigation classes) and that the IR sensor has to have a 360 degree field of view. A bonus with this model compared to others we have considered is that the IR sensor rotates directly on a motor. In earlier versions we had to convert between the rotation of the motor and the rotation of the sensor, something we don't have to worry about anymore.

Aside from changing the sensor on top to our IR sensor, we have added a compass sensor and fitted the Ultrasonic sensor in front instead. We also slightly modified the top motor and sensor so that its rotational axis is between the wheels, something that will facilitate calculating the position of obstacles.
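To show why that placement helps, here is a small sketch of how an obstacle position can be computed when the sensor rotates around the same point the robot turns around. It is an illustration only: `ObstacleLocator`, the coordinate conventions and the units are assumptions, not code from the project.

```java
class ObstacleLocator {
    // Robot position in cm, heading in degrees (0 = along +x, counterclockwise positive).
    // sensorAngleDeg is the rotation of the sensor relative to the robot's heading.
    // Returns {x, y} of the detected obstacle in the same coordinate system.
    static double[] locate(double robotX, double robotY, double headingDeg,
                           double sensorAngleDeg, double distanceCm) {
        // With the sensor axis on the turning point, the bearing is just the sum
        // of the two angles and the measurement starts at the robot's position.
        double bearing = Math.toRadians(headingDeg + sensorAngleDeg);
        return new double[] {
            robotX + distanceCm * Math.cos(bearing),
            robotY + distanceCm * Math.sin(bearing)
        };
    }
}
```

If the sensor sat somewhere else on the robot, its mounting offset would first have to be rotated by the heading and added to the robot position, which is the extra step the new construction avoids.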

Today we have also done several tests to determine how accurate the navigation classes are, and the results are somewhat disappointing. For some reason navigation with the help of the compass sensor is actually less accurate than a simple tacho navigator that only uses the rotation of the wheels to navigate. Also, bugs in both classes severely limit the number of methods we can use while still maintaining reasonable accuracy. For now we have settled on using Forward(), Backward() and Rotate(), which is accurate enough for our needs. Hopefully we (or leJOS, which is still in beta after all) will solve some of the problems and we won't be limited to these forever.

/Josef and Peter

Labels:

accuracy,

compass sensor,

explorer,

navigation,

new robot,

nxt,

nxtprograms

2009-10-07

Communication framework and a broken NXT

When I first bought the NXT, the sound from its speaker was not good at all. At first I thought it was supposed to be like that; it's just Lego and probably a cheap speaker. Just a couple of days later, though, there was no sound from it at all, so a couple of weeks ago I sent it in to be repaired or replaced, and now the new one has arrived :) That's why we haven't been able to do or write much lately.

PenemuNXTFramework 0.1

What I have done while the NXT was gone is write a communication framework that's supposed to be a middle layer between the existing communication classes in leJOS and the programmer. It's based on some interfaces that you must implement, and then it handles a lot of stuff for you.

Some key features are:

Queue: Lets you set up a queue of data sets to send. This allows the data to be sent when the NXT has time, since it may be busy at the moment.

Priority: Give priority to a data set and it will be sent before all other items in the queue and processed first at the receiver. Good to use for shutdown commands.

Consistent: The syntax and classes are exactly the same on the NXT as on the PC.

Choose type: When you set up the communication you specify whether to use USB or Bluetooth. You only specify it once and you don't have to change anything else.
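The queue and priority features listed above can be illustrated with a small stand-alone sketch. It does not use the framework's real classes; `SendQueueSketch` and its methods are hypothetical, and the actual sending over USB or Bluetooth is left out.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Two FIFO queues: prioritized items always leave before normal ones.
class SendQueueSketch<T> {
    private final Deque<T> urgent = new ArrayDeque<>();
    private final Deque<T> normal = new ArrayDeque<>();

    // Called by the program whenever it has data to send.
    synchronized void add(T item, boolean priority) {
        (priority ? urgent : normal).addLast(item);
    }

    // Called by the sender thread when it has time to transmit.
    // Returns null when there is nothing left to send.
    synchronized T next() {
        T item = urgent.pollFirst();
        return item != null ? item : normal.pollFirst();
    }

    synchronized int size() {
        return urgent.size() + normal.size();
    }
}
```

A shutdown command added with `priority` set to true would be returned by `next()` before any sensor data that was queued earlier, which matches the intended use described in the list.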

This is how the base part might look:

    // Setup data factories
    // They produce empty instances of the data objects
    NXTCommunicationDataFactories DataFactories = new NXTCommunicationDataFactories(
            new ServerMessageDataFactory(), new TiltSensorDataFactory());

    // Setup ..
    NXTCommunication NXTC = new NXTCommunication(true, DataFactories,
            new NXTDataStreamConnection());

    // .. and start the communication
    NXTC.ConnectAndStartAll(NXTConnectionModes.USB);

    // Setup a data processor
    // It will be given the incoming queue of data and handle it
    ServerMessageDataProcessor SMDP = new ServerMessageDataProcessor(NXTC, DataFactories);
    SMDP.start();

    // Add some data to the send queue
    NXTC.sendData(new TiltSensorData(TS.getXTilt(), TS.getYTilt(), TS.getZTilt()));

A data factory might look like this:

    public class SensorDataFactory implements INXTCommunicationDataFactory {

        @Override
        public SensorData getEmptyInstance() {
            return new SensorData(NXTCommunicationData.MAIN_STATUS_NORMAL,
                    NXTCommunicationData.DATA_STATUS_EMPTY);
        }

        @Override
        public INXTCommunicationData getEmptyIsShuttingDownInstance() {
            return new SensorData(NXTCommunicationData.MAIN_STATUS_SHUTTING_DOWN,
                    NXTCommunicationData.DATA_STATUS_ONLY_STATUS);
        }

        @Override
        public INXTCommunicationData getEmptyShutDownInstance() {
            return new SensorData(NXTCommunicationData.MAIN_STATUS_SHUT_DOWN,
                    NXTCommunicationData.DATA_STATUS_ONLY_STATUS);
        }
    }

And part of a data processor like this:

    @Override
    public void ProcessItem(INXTCommunicationData dataItem,
            NXTCommunication NXTComm) {
        // Every incoming item is expected to be a SensorData instance
        SensorData SensorDataItem = (SensorData) dataItem;
        Acceleration.add(new AccelerationValues(SensorDataItem.getAccX(),
                SensorDataItem.getAccY(), SensorDataItem.getAccZ()));
    }

It's not finished yet, but soon I hope. Either way, this will make things much easier for us when we want to send data to the computer and back.

Right now we are rebuilding the robot and hopefully we will upload some pictures later this evening.

We are basing the new model on the Explorer from NXTPrograms.com; it's much more stable than our previous construction.

/Peter Forss

Labels:

bot,

broken nxt,

communication,

framework,

nxt,

wheeled robot

2009-09-06

New release of leJOS firmware

I've now updated the robot and the computers to leJOS 0.85.

According to http://lejos.sourceforge.net/ the changes are:

- better support and documentation for MAC OS X, including the Fantom USB driver

- a Netbeans plugin

- improved JVM speed and many more amazing improvements by Andy

- support for the new LEGO color sensor in the NXT 2.0 set

- now supports the instanceof keyword

- detection of rechargeable batteries and improved battery indicator

- nanosecond timers and improved timer support with the Delay class.

- % operation on floats and doubles

- Class, including the isAssignableFrom(Class cls) method

- display of LCD screen in ConsoleViewer

- major speed and accuracy improvements to the Math class from Sven

- platform independent lejos.robotics packages

- new navigation proposal (work in progress) that is platform independent, supports more vehicles, has better localization support, and new concepts of pose controllers and path finders

- preliminary support for probabilistic robotics, including general purpose KalmanFilter class using matrix algebra

- reworking of the Monte Carlo Localization classes

- limited java.awt and java.awt.geom classes

/Peter F

Labels:

lejos 0.85,

update

2009-09-05

Old memories

Before NXT there was RIS with RCX and I have two of those.

A couple of years ago a friend and I built two robots that were able to find each other, dock and then exchange a ball.

One of them has a lamp and the other one has a light sensor. The "child" scans 360° to find the brightest point and then heads in that direction. On its way it makes small adjustments to keep heading for the brightest point, in other words the "mother".

Here is a video showing the docking process:

It's a little bit sad that I've lost the latest version of the program, which contained the "ball exchanging" part (and I haven't had the time to rewrite it), so the video doesn't show that.

This isn't actually part of PenemuNXT, just fun to show the world :)

/Peter Forss

AI and communication

We still don't have anything new to show you right now, but that doesn't mean that we're slacking. We've started working on two different things that together should enable us to create a working prototype of our mapping robot.

I (Josef) am working on an AI for the robot so it can navigate through the room while scanning. I tried to create my own navigation class that would calculate the position of the robot based only on the rotation of the wheels, but for some reason it's not working. The algorithm to calculate the angle it's facing seems to work, but only for certain values. It looks like a rounding error somewhere, but I don't know where.

    public double getRobotangle(){
        robotnewangle = robotangle - (Math.toRadians(CS.getDegreesCartesian()));
        robotangle = (Math.toRadians(CS.getDegreesCartesian()));
        leftwheelangle = ((Math.toRadians(Motor.A.getTachoCount())) - leftwheeloldangle);
        rightwheelangle = ((Math.toRadians(Motor.B.getTachoCount())) - rightwheeloldangle);
        leftdist = ((wheeldiameter*Math.PI)*leftwheelangle/(2*Math.PI));
        rightdist = ((wheeldiameter*Math.PI)*rightwheelangle/(2*Math.PI));
        return robotangle;
    }

The algorithm to calculate its position doesn't seem to work at all, even if I use values from the compass sensor. For some reason it never returns any data.

    public Point getRobotpos() {
        float x, y, hypotenuse;
        hypotenuse = (float)(Math.sqrt((2*Math.pow((getRobotAverageDist()/robotnewangle),2))
                -((2*Math.pow((getRobotAverageDist()/robotnewangle),2))*Math.cos(robotnewangle))));
        x = (float)(robotpos.x + (Math.cos(getRobotAverageangle()*hypotenuse)));
        y = (float)(robotpos.y + (Math.sin(getRobotAverageangle()*hypotenuse)));
        robotpos.x = x;
        robotpos.y = y;
        return robotpos;
    }

Feel free to check them both out on our Google Code site and share any tips you have. Right now the code is a bit unstructured, however. The algorithm that calculates the angle based on the rotation of the wheels is commented out right now in favor of a similar method that uses the compass sensor instead.
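For comparison, here is a minimal sketch of wheel-based dead reckoning for a two-wheeled robot. It is written independently of the code above and is not taken from the project; the class name `DeadReckoning`, the wheel diameter and the track width (the distance between the wheels) are assumptions for illustration. Two things in the position method above may be worth checking against it: `Math.cos(getRobotAverageangle()*hypotenuse)` takes the cosine of the product rather than multiplying the cosine by the hypotenuse, and dividing by `robotnewangle` produces infinite or NaN values whenever the heading has not changed.

```java
class DeadReckoning {
    private final double wheelDiameterCm;
    private final double trackWidthCm;
    private double x, y, headingRad;
    private int lastLeftTacho, lastRightTacho;

    DeadReckoning(double wheelDiameterCm, double trackWidthCm) {
        this.wheelDiameterCm = wheelDiameterCm;
        this.trackWidthCm = trackWidthCm;
    }

    // Feed in the current tacho counts (in degrees) of the left and right motors.
    void update(int leftTachoDeg, int rightTachoDeg) {
        double cmPerDegree = Math.PI * wheelDiameterCm / 360.0;
        double left = (leftTachoDeg - lastLeftTacho) * cmPerDegree;
        double right = (rightTachoDeg - lastRightTacho) * cmPerDegree;
        lastLeftTacho = leftTachoDeg;
        lastRightTacho = rightTachoDeg;

        double distance = (left + right) / 2.0;
        // Heading change in radians, counterclockwise positive.
        double turn = (right - left) / trackWidthCm;

        // Move along the average heading of this step. Nothing is divided by
        // the turn angle, so driving perfectly straight is not a special case.
        double midHeading = headingRad + turn / 2.0;
        x += distance * Math.cos(midHeading);
        y += distance * Math.sin(midHeading);
        headingRad += turn;
    }

    double getX() { return x; }
    double getY() { return y; }
    double getHeadingRad() { return headingRad; }
}
```

Calling `update` often, with small steps between calls, keeps the error of the average-heading approximation small. Wheel slip still accumulates over time, which is one reason a compass reading is attractive as a second source for the heading.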

When my own class didn't work I was forced (for the time being anyway) to use the navigation class in leJOS, which unfortunately limits me to using only the methods provided by that class to navigate the robot. I'd rather be able to manipulate the motors at will, for example to be able to follow a wall easily.

I'm using behavior programming for my work, a really smart way of creating AIs, since it's so easy to implement, edit or remove different parts of it. I recommend reading the leJOS tutorial about it if you're interested.

Peter is working to improve our communication, which as you could see in our movie already works, but could be made a lot smoother and more structured. The idea of the new communication class is that you add data that you want to send to a queue. The class will then process one item at a time and send it over either USB or Bluetooth (depending on what you want to use). The receiver will add each received item to a list and you may then process them whenever you want. There is one client (NXT) part and one server (PC) part in this.

Hopefully, when we're done with these things, or at least have something that works, we can combine it with the scanning algorithm we already have and, with small tweaks to the graphical interface, get a crude prototype that should be able to move around and scan a room, and paint it on a computer screen in real time.

/Josef

Labels:

AI,

behaviour,

bluetooth,

communication,

USB

2009-09-01

Description document

Today we published two PDF documents describing our project PenemuNXT in more detail, one in Swedish and one in English.

You can download them from here:

PenemuNXT - Swedish

PenemuNXT - English

Labels:

description,

PDF

2009-08-26

2009-08-21

Improved scanner

Apart from having a lot of problems with the USB communication today (which turned out to be due to a driver error), we improved our scanner a lot. Today we used the OpticalDistanceSensor instead of the UltrasonicSensor, and it turned out that the OpticalDistanceSensor is much more accurate when measuring the distance to a surface at an angle. It seems that the optical solution is much better than the ultrasonic one.

Anyhow, this is what the test looked like:

Comparison

Comparison between the old sensor and the new one:

OLD (Ultrasonic)

NEW (Optical)

As you can see, the new one looks a lot more like the environment the scanner is standing in. The odd lines in the corners are there because there are gaps between our boxes and the sensor picks them up. There is still more to improve, though.

We also tested the new Accelerometer/Tiltsensor.

No big test yet, just printing out all the info on the display to see that it works.

Enough for today.

/Peter and Josef

First official project lesson in school

Today is our first official school lesson for our project. We've received information about how we are supposed to work and what the result is supposed to be. We will soon meet our mentor and discuss our plans.

Later this afternoon we will test a new navigator class and a new Accelerometer/Tiltsensor that we've bought. We will also try to create a simple prototype for our product. With the sensors and robot we have and the new navigation class we're working on, we should be able to create something that can follow a wall and map out its contours without too much trouble.

Labels:

acceleration sensor,

first lesson,

school

2009-07-26

First test of the new sensors

So we finally decided to buy the sensors I told you about a couple of weeks ago.

We bought this from Mindsensors.com:

* High Precision Long Range Infrared distance sensor for NXT (DIST-Nx-Long-v2)

* Multi-Sensitivity Acceleration Sensor v3 for NXT - (ACCL-Nx-v3)

And this from HiTechnic.com:

* NXT Compass Sensor

* NXT Extended Connector Cable Set

And now they've finally arrived in Sweden :)

I've been able to test the compass and the distance sensor so far. Josef still has the acceleration sensor at home, and we will test it together when we meet in a couple of weeks.

Anyhow, they look really good :) The accuracy of the distance sensor is very high; it gives you the distance in mm and it works well!

I put together a simple bot to test out the CompassNavigator in leJOS.

We will do some more advanced stuff soon!

2009-06-30

Useful sensors

This weekend I read the book Develop LeJOS Programs Step by Step by Juan Antonio Breña Moral. It was really good, with a lot of nice examples of how to do leJOS stuff. It also included some information not directly connected to leJOS, like the history of Lego Mindstorms, but this was really entertaining to read.

Anyhow, the book included some useful links, and one of them brought me to the company Mindsensors. I found a really nice sensor that could improve this project a lot. The High Precision Long Range Infrared distance sensor for NXT (DIST-Nx-Long-v2) would allow us to measure distances between 20 and 150 cm in millimeters!! That's a ten times finer resolution than the ultrasonic sensor, which only gives us the distance in cm.

I also read that the CompassNavigator in leJOS can take advantage of the compass sensor from HiTechnic. This would be really nice to have because (as I've understood it) the turns won't depend on the tachometer in the motor. Instead it will look at the angle the compass returns, i.e. it will rotate until the compass says it has rotated as much as needed.
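As a rough sketch of what "rotate until the compass says it has rotated enough" could look like, here is the angle arithmetic in plain Java. This is not how CompassNavigator is implemented (I haven't read its source); the class name, method names and the tolerance parameter are assumptions made for the example. The tricky part is the wrap-around at 360 degrees.

```java
/** Hypothetical helper for compass-based turning, not a leJOS class. */
final class CompassTurn {

    /**
     * Signed shortest turn in degrees from the current heading to the
     * target heading, in the range [-180, 180).
     */
    static double headingError(double currentDeg, double targetDeg) {
        double diff = (targetDeg - currentDeg) % 360.0;
        if (diff < -180.0) {
            diff += 360.0;
        }
        if (diff >= 180.0) {
            diff -= 360.0;
        }
        return diff;
    }

    /** True once the compass reading is close enough to the target. */
    static boolean turnComplete(double currentDeg, double targetDeg, double toleranceDeg) {
        return Math.abs(headingError(currentDeg, targetDeg)) <= toleranceDeg;
    }
}
```

A turn loop would then keep the motors running and re-read the compass until turnComplete(...) returns true, instead of counting motor degrees.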

Labels:

book,

compass sensor,

distance sensor

2009-06-21

Going the Right Way

Now we've finally begun to build something that with a bit of imagination remotely resembles our ultimate goal. UltrasonicScanner is a stationary robot which is much like the UltrasonicTest, but now we've integrated communication between the computer and the NXT through Bluetooth and we can let the computer do all the calculation and show the results graphically.

UltrasonicScanner

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/UltrasonicScannerClient/src

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/UltrasonicScannerServer/src

The code is more or less CommunicationTest combined with UltrasonicTest. We've established streaming of both the ultrasonic sensor's getDistance() and the motor's getTachoCount() (which returns the angle from its original position) and then perform all the necessary calculations on the server side. We also have a third "channel" streaming data to allow us to give commands in both directions. This means that both the NXT and the Java application have the ability to close both programs.

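private Point getScreenPos(int Distance, int Angle) {
    // Rotate by 90 degrees so that angle 0 points straight up on the screen.
    Angle += 90;

    int x, y;
    int distx, disty;

    // Scale the distance (clamped to the sensor's maximum) to the panel size.
    distx = (int) (((Math.min(ULTRASONIC_SENSOR_MAX_DISTANCE, Distance) / (double) ULTRASONIC_SENSOR_MAX_DISTANCE) * (getWidth() / 2)));
    disty = (int) ((Math.min(ULTRASONIC_SENSOR_MAX_DISTANCE, Distance) / (double) ULTRASONIC_SENSOR_MAX_DISTANCE) * getHeight());

    // Polar to Cartesian, with the origin at the bottom center of the panel.
    x = (int) ((distx * Math.cos((Angle) * Math.PI / 180)) + (getWidth() / 2));
    // Mirror horizontally.
    x = getWidth() + (x * -1);
    // Screen y grows downwards, so flip the sign.
    y = (int) (-1 * (disty * Math.sin(Angle * Math.PI / 180)) + getHeight());

    return new Point(x, y);
}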

This is the algorithm we use to calculate the coordinates on the screen based on the data from the NXT, and it's all straightforward mathematics.

As you can see in these pictures, the "map" the robot managed to create isn't totally accurate. Apparently the sensor can't give accurate data when facing a flat surface at an angle, with the result that flat surfaces seem to curve around the robot. Once we have the robot mobile, a lot of this problem should be solved.

If you check out our videos you can also see a version from before we implemented Bluetooth for it.

This is the final result:

Labels:

bluetooth,

lego,

nxt,

ultrasonic

It's working!

It's astonishing how much you can manage to do in just one day of coding. Or astonishing how long coding takes, depending on how you look at it. In one day we've started to familiarize ourselves with the NXT and we've created all kinds of smaller test programs, all of which you can find on our Google Code page.

HelloWorld

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/HelloWorld/src

The first basic program, to show that the NXT and leJOS work properly. It's the same program that every programmer has written at least once early in their career. All it does is write a String on our NXT's screen. The leJOS code for writing on the screen of the NXT is:

LCD.drawString("String",x,y);

UltrasonicTest

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/UltrasonicScannerClient/src

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/UltrasonicScannerServer/src

In this project with its very intuitive name we started playing with the Ultrasonic Sensor. It works like a sonar: it sends out ultrasonic sound and measures the time until the echo comes back. To use the sensor you simply declare it, and it then has a number of predefined methods. Note that you have to specify the port the sensor is connected to as well.

UltrasonicSensor sensor = new UltrasonicSensor(SensorPort.S1);
int distance = sensor.getDistance();
LCD.drawInt(distance, 1, 1);

These lines will cause the sensor to measure the distance ahead of it and write out the distance in centimeters on the display.
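The sonar principle behind this can be written down in a few lines. Note that this is only the physics, not something you need to do yourself: the sensor does the timing internally and getDistance() just hands you the result. The class name is made up for the example, and the speed of sound is the usual room-temperature approximation of about 343 m/s.

```java
/** Hypothetical illustration of the time-of-flight principle. */
final class EchoDistance {

    /** About 343 m/s at room temperature, expressed in cm per microsecond. */
    static final double SPEED_OF_SOUND_CM_PER_US = 0.0343;

    /**
     * Distance to the obstacle in cm, given the time between sending the
     * pulse and hearing the echo. The sound travels there and back, so
     * the one-way distance is half of the total.
     */
    static double distanceCm(double roundTripMicros) {
        return roundTripMicros * SPEED_OF_SOUND_CM_PER_US / 2.0;
    }
}
```

An echo that returns after roughly 5.8 milliseconds therefore corresponds to an obstacle about one meter away.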

We used this to create a program that measures the distance over a 180 degree arc and then draws it on the LCD screen. Here we also used a motor to rotate the ultrasonic sensor. The motors also have a set of predefined methods, but you don't have to declare them.

class HeadRotator extends Thread {
    @Override
    public void run() {
        Motor.A.setSpeed(40);
        while (!this.isInterrupted()) {
            // Start turning towards -100 degrees; "true" makes the call return immediately.
            Motor.A.rotateTo(-100, true);
            Sound.beep();
            // Wait until the head has passed -90 degrees.
            while (Motor.A.getTachoCount() >= -90 && !this.isInterrupted()) {
            }
            try {
                Thread.sleep(200);
            } catch (InterruptedException e) {
                return; // interrupted while sleeping: stop rotating
            }
            Sound.beep();
            // Same again in the other direction.
            Motor.A.rotateTo(100, true);
            while (Motor.A.getTachoCount() <= 90 && !this.isInterrupted()) {
            }
            try {
                Thread.sleep(200);
            } catch (InterruptedException e) {
                return; // interrupted while sleeping: stop rotating
            }
            Sound.beep();
        }
    }
}

This loop makes the sensor rotate back and forth in a 180 degree arc. In a separate thread we continuously gather data, which we then translate into coordinates on the LCD and draw.

We noted that the sensor isn't very accurate when facing a flat surface at an angle, and when the sensor swept over a flat surface the surface would appear curved on the screen. This is something we'll have to take into consideration later on. We also noticed the limits of the NXT's computing capacity, as it didn't quite manage to keep up with the incoming data. The most difficult part for it, though, was writing all the data to the LCD fast enough, so the screen flickered.

CommunicationTest

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/CommunicationTestClient/src

http://code.google.com/p/penemunxt/source/browse/#svn/trunk/CommunicationTestServer/src

To solve the problem with the NXT's limited computing capacity we wanted to be able to run most of the computation on a server, in this case a computer. Also we want to be able to show the results on a real screen instead of the very limited LCD of the NXT. Thus, we had to establish a communication link.

The first comm test was just a simple app sending the data from a touch sensor to the computer through USB. When the touch sensor was pressed, the computer app should exit. leJOS includes some really great examples for this, so it wasn't that hard to establish the first connection.

We extended the data transfer so that it also transmitted the data from the sound sensor as well as the ultrasonic sensor in real time. The PC received the data and painted it to an applet as a graph. The PC also always sends back a status code telling the NXT whether the status is OK or not; if it's not OK, everything should shut down.

After that it wasn't that hard to switch to Bluetooth instead. They use the same connector but with different protocols:

USB:

NXTConnector conn = new NXTConnector();
conn.connectTo("usb://");

Bluetooth:

NXTConnector conn = new NXTConnector();
conn.connectTo("btspp://");

There are better ways to implement it, though, which we will test later on. Anyhow, we missed one important thing in the beginning: we forgot to add the reference to the Bluetooth package bluecove.jar. It took us at least one hour to figure out that this was the missing part.

Video of the CommunicationTest:

Summary

What we've learned so far will be really important. Now we will use our new knowledge of Bluetooth communication and ultrasonic data to build a simple bot that rotates, collects ultrasonic data and sends it to the computer to display it.

Stay tuned!!

/Peter And Josef

Links

http://lejos.sourceforge.net/nxt/nxj/api/index.html leJOS NXT API

http://lejos.sourceforge.net/nxt/pc/api/index.html leJOS PC API

http://lejos.sourceforge.net/nxt/nxj/tutorial/Communications/Communications.htm leJOS Communication Tutorial

2009-06-20

PenemuNXT is up and running!

We have now officially started on our project!

PenemuNXT is a project by Josef Hansson and Peter Forss. PenemuNXT means "explorer" in Indonesian, and that's what it's going to be. We are doing this project as our final main project at our school Berzeliusskolan in Linköping, Sweden. The main reason for the project is to learn more about programming and Java, but since we wanted a result we could see and feel for ourselves we decided to build a robot in Lego Mindstorms NXT.

In its final form the robot is going to be able to scan its environment using the Ultrasonic Sensor from Lego and then wirelessly send the data to a server, which processes it into a map that will hopefully be reasonably close to how the environment actually looks.

The firmware leJOS provides us with the means to program the NXT using Java, a language we are already somewhat familiar with and which can be used in other applications as well. The code will be open source and available through Google Code.

While we go about on our project you'll most likely see all kinds of different smaller robots and programs we create while we familiarize ourselves with the NXT and learn new ways of programming.

Let the project begin!

Josef Hansson

Peter Forss

Links

http://code.google.com/p/penemunxt/ - All our code will be hosted here.

http://lejos.sourceforge.net/ - The firmware which allows us to program the NXT using Java

Labels:

lego,

mindstorms,

nxt,

penemunxt
